Docker Swarm turns multiple Docker hosts into a single cluster.

Advantages

Cluster management integrated with Docker Engine: Use the Docker Engine CLI to create a swarm of Docker Engines where you can deploy application services. You don’t need additional orchestration software to create or manage a swarm. No other tools are needed to run a cluster.

Decentralized design: Instead of handling differentiation between node roles at deployment time, the Docker Engine handles any specialization at runtime. You can deploy both kinds of nodes, managers and workers, using the Docker Engine. This means you can build an entire swarm from a single disk image. Nodes are distinguished by role rather than by image, which simplifies deployment.

Declarative service model: Docker Engine uses a declarative approach to let you define the desired state of the various services in your application stack. For example, you might describe an application comprised of a web front end service with message queueing services and a database backend. Services are deployed declaratively, in the style of a docker-compose file.

Scaling: For each service, you can declare the number of tasks you want to run. When you scale up or down, the swarm manager automatically adapts by adding or removing tasks to maintain the desired state. The number of tasks per service can be adjusted freely.

Desired state reconciliation: The swarm manager node constantly monitors the cluster state and reconciles any differences between the actual state and your expressed desired state. For example, if you set up a service to run 10 replicas of a container, and a worker machine hosting two of those replicas crashes, the manager creates two new replicas to replace the replicas that crashed. The swarm manager assigns the new replicas to workers that are running and available. The desired state is restored automatically: when a container fails, it is replaced.

Multi-host networking: You can specify an overlay network for your services. The swarm manager automatically assigns addresses to the containers on the overlay network when it initializes or updates the application. An overlay network provides networking across hosts.

Service discovery: Swarm manager nodes assign each service in the swarm a unique DNS name and load balances running containers. You can query every container running in the swarm through a DNS server embedded in the swarm. DNS is built in.

Load balancing: You can expose the ports for services to an external load balancer. Internally, the swarm lets you specify how to distribute service containers between nodes. Load balancing is built in.

Secure by default: Each node in the swarm enforces TLS mutual authentication and encryption to secure communications between itself and all other nodes. You have the option to use self-signed root certificates or certificates from a custom root CA. Communication between nodes is encrypted by default.

Rolling updates: At rollout time you can apply service updates to nodes incrementally. The swarm manager lets you control the delay between service deployment to different sets of nodes. If anything goes wrong, you can roll back to a previous version of the service. Rolling updates run automatically, without manual intervention.
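As a rough illustration of the declarative model and scaling described above, the sketch below writes a small stack file and hands it to docker stack deploy. The stack name demo, the file path, the service name web, and the replica count are all assumptions made for this example. With DRY_RUN=1 the docker command is only printed.

```shell
#!/bin/sh
# Sketch only: the file location and the service definition are assumptions.
STACK_FILE="${STACK_FILE:-/tmp/demo-stack.yml}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Describe the desired state: three replicas of nginx, published on port 8080.
write_stack_file() {
  cat > "$STACK_FILE" <<'EOF'
version: "3.8"
services:
  web:
    image: nginx
    ports:
      - "8080:80"
    deploy:
      replicas: 3
EOF
}

# Hand the desired state to the swarm; the managers create and maintain the tasks.
deploy_stack() {
  run docker stack deploy --compose-file "$STACK_FILE" "${1:-demo}"
}
```

On a manager node, write_stack_file followed by deploy_stack demo would deploy the stack. Changing replicas in the file and deploying again is one way to scale.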

Nodes

Adding nodes

manager node 1

[root@node1 ~]# docker swarm init --advertise-addr 192.168.15.141

Swarm initialized: current node (r8erv03rgzp5zxqm1xcshqdyl) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-2gabg98ff8uiju4una8jmvg3q8zymne6onkpydl0jbbzot0o09-5nhsrdbzq4dvlpp1t23cjx93y 192.168.15.141:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

# Run docker swarm join-token manager to generate a token for adding manager nodes

[root@node1 ~]# docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-2gabg98ff8uiju4una8jmvg3q8zymne6onkpydl0jbbzot0o09-4wz4235g7e1a1pdgtcvdh406z 192.168.15.141:2377

manager node 2

[root@node2 ~]# docker swarm join --token SWMTKN-1-2gabg98ff8uiju4una8jmvg3q8zymne6onkpydl0jbbzot0o09-4wz4235g7e1a1pdgtcvdh406z 192.168.15.141:2377

This node joined a swarm as a manager.

[root@node2 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

r8erv03rgzp5zxqm1xcshqdyl node1.local Ready Active Leader 20.10.12

m158ryu3mk3zyvpyl777fud0j * node2.local Ready Active Reachable 20.10.12

worker node 1

[root@mgmt ~]# ssh root@192.168.15.144

Last login: Mon Mar 7 10:36:36 2022 from 192.168.15.100

[root@node4 ~]# docker swarm join --token SWMTKN-1-2gabg98ff8uiju4una8jmvg3q8zymne6onkpydl0jbbzot0o09-5nhsrdbzq4dvlpp1t23cjx93y 192.168.15.141:2377

This node joined a swarm as a worker.
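After a node joins, it can be useful to confirm from a manager that every node reports Ready. The helper below is a minimal sketch: the function name not_ready_nodes is hypothetical, and it expects "hostname status" pairs such as those produced by docker node ls with a --format template.

```shell
#!/bin/sh
# Print the hostname of every node whose status is not "Ready".
# Input: one "hostname status" pair per line on stdin.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage on a manager (requires a running swarm):
#   docker node ls --format '{{.Hostname}} {{.Status}}' | not_ready_nodes
```

An empty result means all nodes are Ready.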

Display the join tokens again

docker swarm join-token worker

docker swarm join-token manager
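When joining nodes from a script, the -q flag of docker swarm join-token prints only the token, which can then be combined with the manager address. The function name build_join_cmd is a hypothetical helper for this sketch. Port 2377 is the one shown in the output above.

```shell
#!/bin/sh
# Build the join command from a token and the manager's address.
build_join_cmd() {
  token="$1"
  manager_addr="$2"
  echo "docker swarm join --token $token $manager_addr:2377"
}

# Usage on a manager (requires a running swarm):
#   token=$(docker swarm join-token -q worker)
#   build_join_cmd "$token" 192.168.15.141
```

If a token has been exposed, docker swarm join-token --rotate worker issues a new one and invalidates the old one.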

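Node maintenance

A node's availability is one of three states: "active" | "pause" | "drain"

drain: service tasks running on the node are moved elsewhere and no new tasks are scheduled on it; standalone containers are not affected. A manager node can also be set to drain so that it only ever performs manager duties.

pause: tasks already on the node keep running, but no new tasks are scheduled on it.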
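The three availability states map directly onto docker node update --availability. The wrapper below is a small sketch that rejects anything other than those three values; the function names run and set_availability are hypothetical, and DRY_RUN=1 only prints the command.

```shell
#!/bin/sh
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Set a node's availability after validating the requested state.
set_availability() {
  node="$1"
  state="$2"
  case "$state" in
    active|pause|drain)
      run docker node update --availability "$state" "$node"
      ;;
    *)
      echo "unknown availability: $state" >&2
      return 1
      ;;
  esac
}
```

For example, set_availability node4.local pause before maintenance, then set_availability node4.local active afterwards.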

Removing a node

docker swarm leave --force
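docker swarm leave runs on the node that is leaving. The managers keep listing that node (as Down) until it is removed with docker node rm. The sketch below covers the manager-side steps around the leave; the function names are hypothetical, and draining first is a cautious choice rather than a requirement.

```shell
#!/bin/sh
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Run on a manager BEFORE the node leaves: move its tasks elsewhere,
# and demote it first if it is currently a manager.
prepare_removal() {
  node="$1"
  role="${2:-worker}"
  run docker node update --availability drain "$node"
  if [ "$role" = "manager" ]; then
    run docker node demote "$node"
  fi
}

# Run on a manager AFTER "docker swarm leave" was executed on the node itself.
forget_node() {
  run docker node rm "$1"
}
```

A typical order would be prepare_removal node4.local, then docker swarm leave on node4, then forget_node node4.local.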

docker swarm shared storage

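When a container needs to store data, it needs persistent space. With several Docker nodes, shared storage lets every node access the same data.

Options to consider include OCFS2, GFS2, and GlusterFS.

In terms of performance, OCFS2 is the strongest of these.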
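Once a shared filesystem is mounted at the same path on every node, a service can bind-mount a directory from it so that its data follows the task to whichever node runs it. This is only a sketch: the mount point /mnt/shared, the subdirectory redis, and the service name redis-shared are assumptions, and the source directory must already exist on each node.

```shell
#!/bin/sh
# Assumed mount point of the shared filesystem, identical on every node.
SHARED_DIR="${SHARED_DIR:-/mnt/shared}"

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create a service whose /data directory lives on the shared filesystem.
create_redis_with_shared_data() {
  run docker service create \
    --name redis-shared \
    --mount "type=bind,source=$SHARED_DIR/redis,target=/data" \
    redis:3.0.6
}
```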

service control

https://docs.docker.com/engine/swarm/ingress/

Deploy

- The docker service create command creates the service.
- The --name flag names the service helloworld.
- The --replicas flag specifies the desired state of 1 running instance.
- The arguments alpine ping docker.com define the service as an Alpine Linux container that executes the command ping docker.com.

[root@node1 ~]# docker service create --replicas 1 --name helloworld alpine ping docker.com

jmz6nfsabxz0nxa6qmxya6su6

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

# Run docker service ls

[root@node1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

jmz6nfsabxz0 helloworld replicated 1/1 alpine:latest

**Inspect** a service on the swarm

[root@node1 ~]# docker service inspect --pretty helloworld

ID: jmz6nfsabxz0nxa6qmxya6su6

Name: helloworld

Service Mode: Replicated

Replicas: 1

Placement:

UpdateConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Update order: stop-first

RollbackConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Rollback order: stop-first

ContainerSpec:

Image: alpine:latest@sha256:21a3deaa0d32a8057914f36584b5288d2e5ecc984380bc0118285c70fa8c9300

Args: ping docker.com

Init: false

Resources:

Endpoint Mode: vip

Scale

docker service scale helloworld=5
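After scaling, the REPLICAS column of docker service ls (for example 3/5) shows running versus desired tasks. The helper below is a small sketch for checking that value in a script; the function name is_converged is hypothetical.

```shell
#!/bin/sh
# Succeed when a REPLICAS value such as "5/5" shows running == desired.
is_converged() {
  value="${1%% *}"          # drop any trailing note after the first space
  running="${value%%/*}"    # part before the slash
  desired="${value##*/}"    # part after the slash
  [ "$running" = "$desired" ]
}

# Usage on a manager (requires a running swarm; note that the name filter
# matches by prefix, so it may return more than one service):
#   replicas=$(docker service ls --filter name=helloworld --format '{{.Replicas}}')
#   is_converged "$replicas" && echo "helloworld is fully scaled"
```

docker service ps helloworld lists the individual tasks and the node each one runs on.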

remove

docker service rm helloworld

rolling updates

Create

# Create a redis service with a 10-second delay between task updates

docker service create \
  --replicas 3 \
  --name redis \
  --update-delay 10s \
  redis:3.0.6

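--update-delay: the interval between updating one task (or batch of tasks) and the next. --update-parallelism: the number of tasks updated at the same time (default 1). When an update fails, the update pauses by default.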
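With the redis service created as above, a rolling update is triggered by changing the image, and a rollback returns to the previous definition. The sketch below assumes the target tag redis:3.0.7 purely as an example; the function names are hypothetical, and DRY_RUN=1 only prints the commands.

```shell
#!/bin/sh
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Roll the redis service to a new image tag; tasks are replaced according to
# the service's update parallelism and delay settings.
update_redis() {
  run docker service update --image "redis:${1:-3.0.7}" redis
}

# Return to the previous service definition if the new version misbehaves.
rollback_redis() {
  run docker service rollback redis
}
```

To roll back automatically instead of pausing on failure, --update-failure-action rollback can be passed to docker service create or docker service update.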

[root@node1 ~]# docker node update --availability drain node4.local

node4.local

[root@node1 ~]# docker node inspect --pretty node4.local

ID: mbmizzrjmmr2c8ixiz13admto

Hostname: node4.local

Joined at: 2022-03-07 03:21:41.110232554 +0000 utc

Status:

State: Ready

Availability: Drain

Address: 192.168.15.144

Platform:

Operating System: linux

Architecture: x86_64

Resources:

CPUs: 2

Memory: 1.774GiB

Plugins:

Log: awslogs, fluentd, gcplogs, gelf, journald, json-file, local, logentries, splunk, syslog

Network: bridge, host, ipvlan, macvlan, null, overlay

Volume: local

Engine Version: 20.10.12

# Restore the node to active

docker node update --availability active node4.local

Publish a port

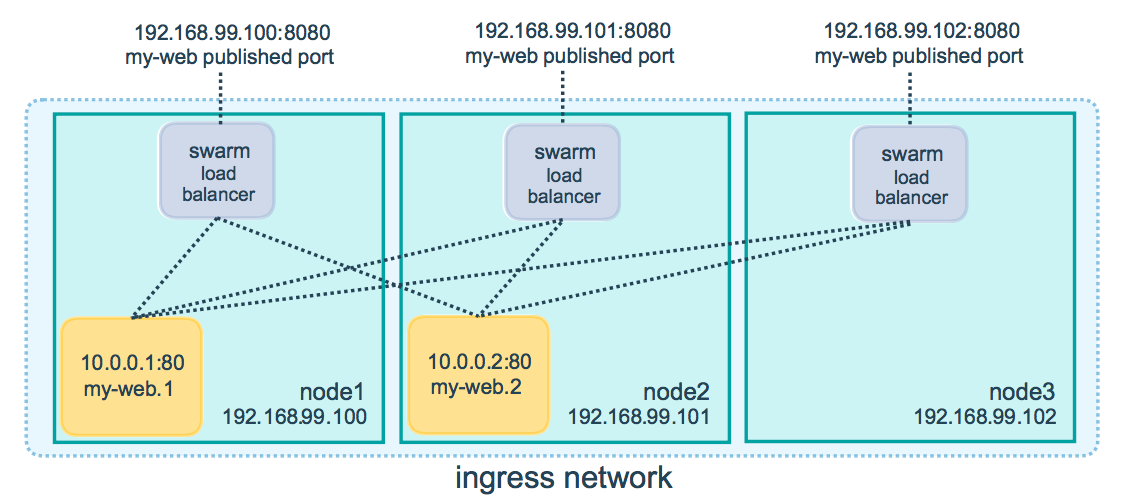

Port 8080 on every node is made available to this service, and requests are routed internally through the routing mesh.

docker service create \
  --name my-web \
  --publish published=8080,target=80 \
  --replicas 10 \
  nginx

# Or, using the short -p syntax

docker service create \
  --name my-web \
  -p 8080:80 \
  --replicas 3 \
  nginx
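A port can also be published on a service that already exists, and the result checked with docker service inspect. The sketch below uses the my-web service from the commands above; the function names are hypothetical, and DRY_RUN=1 only prints the commands.

```shell
#!/bin/sh
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Publish a port on an existing service: publish_port SERVICE PUBLISHED TARGET
publish_port() {
  run docker service update --publish-add "published=$2,target=$3" "$1"
}

# Show the ports a service publishes, as JSON.
show_ports() {
  run docker service inspect --format '{{json .Endpoint.Spec.Ports}}' "$1"
}
```

For example, publish_port my-web 8080 80 followed by show_ports my-web.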

UDP and TCP

docker service create --name dns-cache \
  --publish published=53,target=53 \
  --publish published=53,target=53,protocol=udp \
  dns-cache

docker registry

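The place where Docker stores container images is called a registry; Docker Hub is one such registry.

An organization may consider a self-hosted registry more secure.

In that case, the official Docker registry server can be used.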

# Create the registry service

docker service create \
  --name registry \
  -p 5000:5000 \
  registry

# Pull some image from the hub

docker pull nginx

# add tag to image

docker image tag nginx localhost:5000/nginx_v1

# Push it

docker push localhost:5000/nginx_v1

# List the images stored on the registry server

curl -X GET http://localhost:5000/v2/_catalog
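Besides the catalog, the registry's HTTP API also exposes the tags of each repository under /v2/NAME/tags/list. The helper below is a minimal sketch that builds that URL; the function name tags_url is hypothetical, and the registry address defaults to the localhost:5000 used above.

```shell
#!/bin/sh
# Address of the registry; defaults to the one published above.
REGISTRY="${REGISTRY:-localhost:5000}"

# URL of the tag list for one repository (Registry HTTP API v2).
tags_url() {
  echo "http://$REGISTRY/v2/$1/tags/list"
}

# Usage (requires the registry service to be running):
#   curl -s "$(tags_url nginx_v1)"
```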